Introduction

Imagine asking an AI why it made a decision, and getting back a breezy “Trust me, bro.” 😄

Welcome to black box AI, a digital world where we never quite know how decisions get made. We trust machines to give us good answers, and they are often surprisingly effective, almost like magic. However, their reasoning is nearly impossible for humans to interpret.

Why should you care?

Because black box AI is already making decisions that affect business approvals, medical diagnoses, hiring, finance, and even supply chains.

Its accuracy and speed are impressive, but like many such innovations, its opacity should prompt difficult questions, such as: is it really trustworthy?

In this guide, we’ll pop the lid open on black box AI (relax, no screwdrivers necessary for opening this box 😄). You’ll find out what it is, how it works, where it is used, and when businesses have to weigh performance against explainability. Let’s start cutting away the mystery, one layer at a time.

What is Black Box AI?

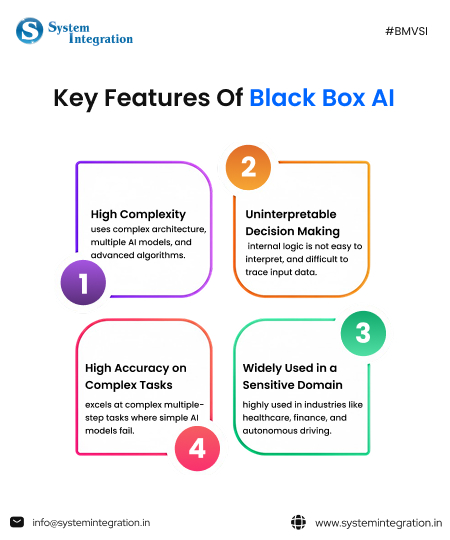

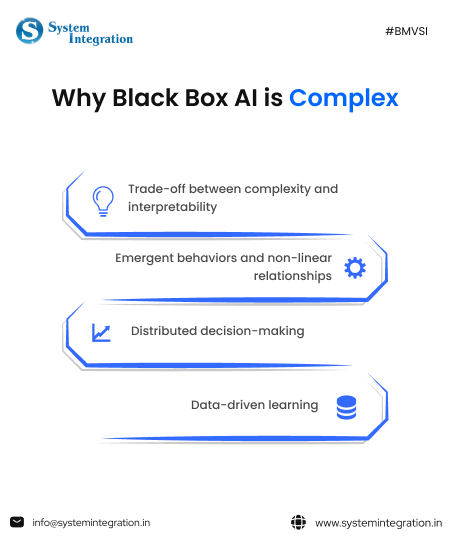

To be honest, it sounds mysterious because it is somewhat mysterious. Imagine asking an AI a question and receiving a confident response, but not knowing exactly how it got there. In a nutshell, that is black box AI. Although the model works, produces outcomes, and even performs exceptionally well, humans remain largely unaware of its internal decision-making process. That makes AI/ML consultation a vital element for companies that want to understand the models behind their internal software.

Deep neural networks and other sophisticated machine learning models are usually the cause of this. At breakneck speed, they analyse vast volumes of data, spot trends, and formulate predictions. The catch? Sometimes, even the engineers who built them are unable to explain the reasons behind a particular output. It’s like putting your faith in a very intelligent friend who never shows their working.

| Why does black box AI raise concerns? 👉 It pairs high accuracy with low explainability 👉 Decisions can be difficult to audit, debug or justify 👉 It raises questions in heavily regulated industries such as healthcare and finance 👉 It makes bias detection more challenging 👉 It drives the need for more explainable AI options |

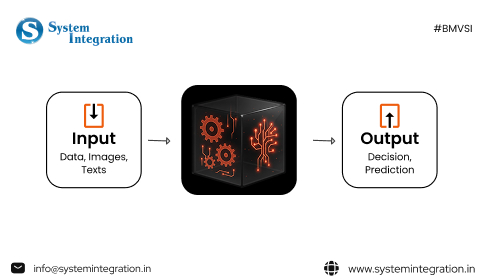

How does Black Box AI Work?

1) Deep Learning Architecture

Deep learning models with multiple hidden layers are at the heart of black box AI. Each layer transforms the output of the layer before it, extracting patterns that become more abstract the deeper you go into the network.

By the time the data reaches the last layer, the AI has turned raw inputs into a confident decision without revealing how it got there.
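To make the layered idea concrete, here is a minimal Python sketch of a forward pass through a tiny network. Everything in it is an assumption for illustration: the weights (`W1`, `W2`, `W3`), the biases and the three input features are hand-picked, hypothetical values, whereas a real model would learn millions of them.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters (a trained model would learn these).
W1 = [[0.8, -0.4, 0.3], [-0.5, 0.9, 0.1], [0.2, 0.2, -0.7], [0.6, -0.1, 0.5]]
B1 = [0.0, 0.1, -0.2, 0.05]
W2 = [[1.2, -0.7, 0.4, 0.9], [-0.3, 0.8, -1.1, 0.2]]
B2 = [0.1, -0.1]
W3 = [[1.5, -2.0]]
B3 = [0.0]


def forward(features):
    """Pass the input through two hidden layers and one output neuron."""
    h1 = dense(features, W1, B1)   # first hidden layer: 4 neurons
    h2 = dense(h1, W2, B2)         # second hidden layer: 2 neurons
    out = dense(h2, W3, B3)        # output layer: 1 neuron
    return h1, h2, out[0]


h1, h2, score = forward([0.7, 0.2, 0.9])
print("hidden layer 1:", [round(v, 3) for v in h1])
print("hidden layer 2:", [round(v, 3) for v in h2])
print("final score:", round(score, 3))
```

The printed hidden-layer values are just numbers between 0 and 1. Nothing in them says "income" or "risk", which is the black box problem in miniature.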

2) Neural Network Training

Black box AI learns by example. During training, it is given huge datasets and tunes millions (or sometimes billions) of parameters to minimize errors.

The model keeps adjusting itself through methods like backpropagation until it is very good at predicting outcomes, sometimes better than humans. The result is also much deeper, more complex and harder to explain.
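As a rough illustration of tuning parameters to minimize errors, the sketch below trains a single sigmoid neuron with gradient descent on a toy dataset (logical AND). It is a deliberate simplification: real networks use backpropagation to carry the same kind of gradient through many layers, and the dataset, learning rate and epoch count here are arbitrary assumptions.

```python
import math

# Toy training set (an assumption for illustration): logical AND.
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def mean_loss(weights, bias):
    """Average cross-entropy error over the training set."""
    total = 0.0
    for x, y in DATA:
        p = predict(weights, bias, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(DATA)


def train(epochs=2000, lr=0.5):
    """Full-batch gradient descent on one neuron (two weights and a bias)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in DATA:
            # For sigmoid + cross-entropy, the gradient w.r.t. the
            # pre-activation is simply (prediction - target).
            error = predict(weights, bias, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        weights = [w - lr * g / len(DATA) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(DATA)
    return weights, bias


loss_before = mean_loss([0.0, 0.0], 0.0)
weights, bias = train()
loss_after = mean_loss(weights, bias)
print("loss before:", round(loss_before, 3), "loss after:", round(loss_after, 3))
print("learned parameters:", [round(w, 2) for w in weights], round(bias, 2))
```

Even here, the learned numbers only mean something because there are just three of them. Scale that up to billions of parameters and the difficulty of explaining any single prediction becomes clear.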

3) Self-Learning Mechanisms

Some black box models keep learning from new data after they are deployed. They adapt, refine the patterns they have learned, and improve their performance over time.

That self-learning ability is what makes black box AI so potent and so unpredictable: it keeps forming new internal logic that goes beyond any rule set people have written for it.
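Learning after deployment can be sketched with a model that updates itself one sample at a time. The `OnlineModel` class, the `stream` helper and the two data patterns below are hypothetical, chosen only to show how a model's internal logic can shift once the incoming data changes.

```python
class OnlineModel:
    """One-weight linear model (y ~ w * x), updated one sample at a time."""

    def __init__(self, lr=0.1):
        self.w = 0.0
        self.lr = lr

    def predict(self, x):
        return self.w * x

    def update(self, x, y):
        # Stochastic gradient step on the squared error for this one sample.
        error = y - self.predict(x)
        self.w += self.lr * error * x


def stream(slope, n):
    """Hypothetical data feed: n samples that follow y = slope * x."""
    xs = [0.5, 1.0, 1.5]
    for i in range(n):
        x = xs[i % len(xs)]
        yield x, slope * x


model = OnlineModel()

# Phase 1: the data the model sees around launch.
for x, y in stream(2.0, 300):
    model.update(x, y)
w_at_launch = model.w

# Phase 2: the world changes, and the deployed model quietly follows it.
for x, y in stream(-1.0, 300):
    model.update(x, y)
w_after_drift = model.w

print("weight at launch:", round(w_at_launch, 3))
print("weight after drift:", round(w_after_drift, 3))
```

Nobody rewrote a rule between the two phases, yet the model now behaves very differently. That is why systems that learn continuously usually need ongoing monitoring rather than a one-time review.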

Some Real World Examples of Black Box AI

📈 Financial Trading Algorithms

These AI systems sift through tremendous amounts of market data, news and historical trends at lightning speed to make trading decisions in fractions of a second.

They can outperform human traders, but it is often nearly impossible to explain in plain language why a particular trade was made.

| Key insights 🧐 Even the makers cannot fully predict behaviour without extensive testing. Models develop their own internal logic from training data patterns. |

👩‍🦰 Facial Recognition Systems

These AI models are built into smartphones, surveillance and security systems, where they recognize faces by learning subtle patterns in images.

The challenge? They can identify a face correctly, but cannot clearly describe which features drove the match.

🩺 Medical Diagnostic Systems

Black box AI, including agentic AI tools, can analyse X-rays, MRIs and laboratory reports to detect diseases with remarkable accuracy.

Doctors may trust the result, but they still need transparency to know why the AI flagged one diagnosis over another.

🚗 Autonomous Vehicles

Driverless cars depend on black box AI to interpret sensor data and drive the car in real time.

From braking to lane changes, their decisions are quick and effective, though the reasoning behind each one isn’t always apparent.

How to Address Black Box AI Issues

- Explainable AI: Developers are integrating explainable AI models to address the black box problem and make systems more transparent. The aim is to show users how decisions are made, which increases trust.

- Auditing and Testing: Regularly reviewing AI models is vital for detecting bias and ensuring fairness. Sensitivity analysis and audits are used to understand the impact of AI on different groups.

- Regulations: Laws, guidelines and government regulations are being applied to address the challenges of black box AI and to increase trustworthiness and transparency.

- Responsible AI: Promoting responsible AI practices keeps the focus on transparency, fairness and ethical considerations in AI development and deployment.
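Two of the ideas above, sensitivity analysis and group-level audits, can be tried without opening the model at all. The sketch below is a simplified illustration: `opaque_score` is a hypothetical stand-in for a black box, and the feature names, applicants and group labels are made up for the example.

```python
import math


def opaque_score(applicant):
    """Hypothetical stand-in for a black box: callers only see the score."""
    z = (3.0 * applicant["income"] - 2.0 * applicant["debt"]
         + 0.0 * applicant["shoe_size"])
    return 1.0 / (1.0 + math.exp(-z))


def sensitivity(model, applicant, delta=0.1):
    """How much the score moves when each feature is nudged by `delta`."""
    base = model(applicant)
    changes = {}
    for name, value in applicant.items():
        nudged = dict(applicant)
        nudged[name] = value + delta
        changes[name] = abs(model(nudged) - base)
    return changes


def approval_rates(model, applicants, groups, threshold=0.5):
    """Share of applicants scoring above `threshold`, per group label."""
    approved, totals = {}, {}
    for applicant, group in zip(applicants, groups):
        totals[group] = totals.get(group, 0) + 1
        if model(applicant) > threshold:
            approved[group] = approved.get(group, 0) + 1
    return {g: approved.get(g, 0) / totals[g] for g in totals}


# Made-up applicants and group labels, purely for illustration.
applicants = [
    {"income": 0.9, "debt": 0.1, "shoe_size": 0.4},
    {"income": 0.8, "debt": 0.3, "shoe_size": 0.6},
    {"income": 0.2, "debt": 0.9, "shoe_size": 0.5},
    {"income": 0.3, "debt": 0.6, "shoe_size": 0.4},
    {"income": 0.4, "debt": 0.7, "shoe_size": 0.7},
    {"income": 0.7, "debt": 0.2, "shoe_size": 0.5},
]
groups = ["A", "A", "A", "B", "B", "B"]

changes = sensitivity(opaque_score, {"income": 0.5, "debt": 0.4, "shoe_size": 0.5})
rates = approval_rates(opaque_score, applicants, groups)
print("sensitivity per feature:", changes)
print("approval rate per group:", rates)
```

A feature whose nudge never moves the score is probably being ignored, and a large gap in approval rates between groups is a signal to investigate further. Neither check proves fairness on its own, but both work from the outside, which is often all you get with a black box.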

The Future of Black Box AI

➔ From mystery to context: The next phase for black box AI is explainable and interpretable systems, where decisions are not only made but also understood through clear reasoning. No more “because the model said so.”

➔ Regulatory pressure will demand transparency: Governments and regulators will increasingly require AI explainability, particularly in high-risk domains like healthcare, finance and hiring, and black box models will be forced to explain their outputs.

➔ Hybrid AI will be standard: Enterprises will combine high-performance black box models with explainable layers on top, balancing accuracy with trust, auditability and compliance.

➔ Trust will itself become a competitive advantage: Businesses that can show how their AI systems reach conclusions will gain customer confidence, while opaque models could encounter pushback or limited uptake.

➔ AI debugging and governance will get better: Advanced monitoring tools will be developed to track decisions, spot bias, and flag anomalies, even within complex black box systems.

➔ Black box AI will not go away, but it will be watched: Instead of being entirely opaque, future black box systems will work under human supervision, ethical frameworks and regular validation to support responsible use.
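One common way to put an explainable layer on top of a black box is a surrogate model: a simple rule fitted to mimic the black box's decisions. The sketch below is only an illustration under stated assumptions. `opaque_model` is a hypothetical stand-in, the feature names are invented, and the surrogate is the simplest possible one, a single "approve if feature > threshold" rule.

```python
from itertools import product


def opaque_model(income_k, years_employed):
    """Hypothetical stand-in for a black box that approves or rejects."""
    return 1 if 2 * income_k + 3 * years_employed > 100 else 0


def fit_stump(rows, labels, names):
    """Find the one-feature rule 'approve if feature > threshold' that
    agrees most often with the black box (its fidelity)."""
    best = None
    for col, name in enumerate(names):
        for threshold in sorted({row[col] for row in rows}):
            preds = [1 if row[col] > threshold else 0 for row in rows]
            agree = sum(p == y for p, y in zip(preds, labels))
            fidelity = agree / len(rows)
            if best is None or fidelity > best[2]:
                best = (name, threshold, fidelity)
    return best


# Probe the black box on a grid of inputs and record only its decisions.
rows = list(product(range(0, 101, 10), range(0, 11)))
labels = [opaque_model(income_k, years) for income_k, years in rows]

feature, threshold, fidelity = fit_stump(rows, labels, ["income_k", "years_employed"])
print(f"Surrogate rule: approve if {feature} > {threshold}")
print(f"Agrees with the black box on {fidelity:.1%} of probes")
```

The surrogate is easy to read and audit, but it only approximates the original. The fidelity score says how far the explanation can be trusted, and the cases where the two disagree are exactly the ones worth a closer look.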

Summarizing Thoughts

The future of AI will be shaped by how we choose between black box AI and transparent, fair AI models. You can also take greater control of this issue by developing your own custom AI model.

For this, you can partner with the best AI development company in India. Their team of experts can help you develop a custom, scalable and trustworthy AI model in a short time and provide regular maintenance after launch.

FAQs

Whether black box AI is safe depends on the type of model you are using, as every model comes with different guidelines. Before you start using any AI tool, read its guidelines carefully, and only then press that “I Agree” button.

Yes, ChatGPT can be considered a black box AI. Its internal decision-making is not fully transparent or explainable, which makes it difficult to understand how it arrived at a given output.

- Biased decisions

- Accountability

- High complexity

- Security concerns

Black box AI: prioritizes performance and complexity over interpretability.

XAI: designed so that humans can understand it and check the reasoning behind every step.

Generative AI is a type of artificial intelligence that creates new content in response to user requests. It is typically considered a black box AI, because it is difficult to trace how it arrived at a given output.