Introduction

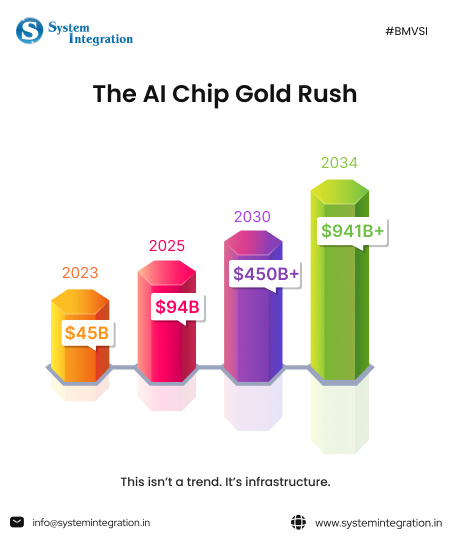

If you thought the CPU vs GPU battles of the early 2000s were intense, hold onto your coffee, because the battle for dominance in AI chips is like Tech Davos on steroids.

In 2026, the semiconductor battlefield looks like a Marvel crossover: Nvidia, AMD, Qualcomm, and a parade of specialized ASICs duking it out for AI inference and training supremacy. But unlike comic book mayhem, there’s real money and real consequences behind these silicon showdowns. So, what’s going on and why should you care?

Let’s break it down in plain language.

What is an AI Chip, Anyway?

Before we grill the contenders, let’s talk basics.

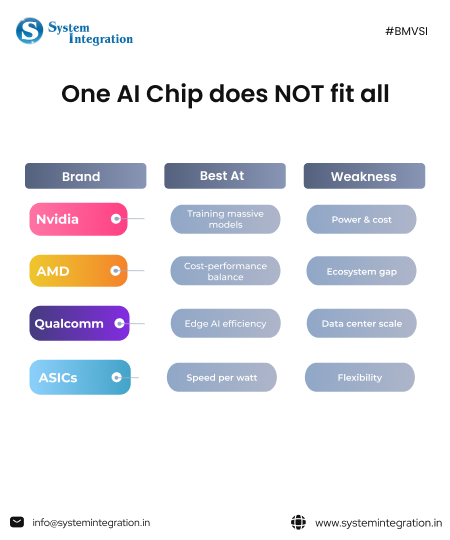

An AI chip is a semiconductor designed to accelerate artificial intelligence workloads: training large language models (LLMs), running real-time neural nets, analyzing images, running inference at the edge, and beyond. These chips are not your grandma’s CPUs. They’re built for parallel processing, matrix multiplications, and blistering efficiency. Think of them as Hulk to a CPU’s Bruce Banner.
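To make "parallel processing" and "matrix multiplications" a little more concrete, here is a minimal sketch in plain Python of the operation these chips spend most of their time on. The function names and the tiny input values are made up for illustration; real frameworks hand this work to heavily optimized hardware kernels rather than Python loops.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each output cell is an independent dot product, so all
            # rows * cols cells could in principle be computed at once.
            out[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return out


def matmul_flops(rows, inner, cols):
    """Operation count: one multiply and one add per inner step, per cell."""
    return 2 * rows * inner * cols


# Illustrative values only: 2 samples with 2 features each,
# passed through a layer with 3 outputs.
inputs = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [0.25, 0.0, 2.0]]
outputs = matmul(inputs, weights)

print(outputs)
print(matmul_flops(4096, 4096, 4096))  # operations for one large square multiply
```

A CPU works through those loops a handful of cells at a time. An AI chip is laid out so that thousands of those independent dot products run simultaneously, which is why the operation count of a single large multiply stops being scary.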

There are three main classes:

- GPUs (Graphics Processing Units): Originally for gaming, now the bread and butter of AI datacenters.

- ASICs (Application-Specific Integrated Circuits): Custom silicon built for ONE purpose: AI. Think Google TPUs.

- Edge AI chips from mobile players like Qualcomm: built to bring AI smarts to laptops, phones, and cars.

This is the stage where our warfare unfolds.

Nvidia: The Unquestioned King of AI Chips

If AI chips were Game of Thrones, Nvidia would be sitting on the Iron Throne and probably selling T-shirts.

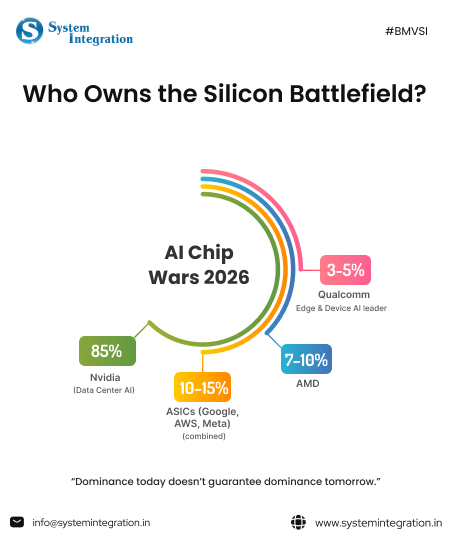

According to All About AI’s article on AI Chip Market Statistics, Nvidia commanded somewhere between 80% and 92% of the AI accelerator market in 2025, especially in high-end data centers that train massive models.

And they aren’t slacking in 2026 either. Their new Vera Rubin architecture and next-gen AI platforms are rolling into full production, boasting multiple specialized chips tailored for everything from raw compute to networking and data movement.

Some stats for context:

- According to Sezarr Overseas News, Nvidia’s AI-related revenue has exploded, pushing quarterly revenue to $57B+ in 2025, largely on the back of data center AI workhorses.

- According to the OECD report, their ecosystem isn’t just hardware: CUDA software has become the default toolkit for AI researchers and engineers worldwide, creating a powerful moat that’s tough to leapfrog.

Bottom Line: Nvidia isn’t just selling chips; it’s selling the rules of engagement in AI hardware.

AMD: The Challenger with Something to Prove

For years, AMD has been the underdog to Nvidia’s GPU dominance. In gaming GPUs and CPUs, AMD has carved out a stubborn niche, but AI chips are a different beast.

Still, as stated in The Guardian’s article, “AMD’s Instinct AI accelerators and partnerships (for example, a multibillion-dollar deal with OpenAI to supply MI450 chips) signal serious ambitions.”

Here’s the twist:

- According to the All About AI article, AMD’s market share in AI accelerators is still small compared to Nvidia’s, but growth is real: estimates put it in the mid-to-high single digits (~5–10%) as of 2025.

- Analysts see AMD nibbling away at Nvidia’s dominance, especially where massive memory and specific performance traits matter.

Think of AMD as the plucky rebel in a sci-fi saga: underestimated but persistent and strategic.

Bottom Line: AMD isn’t the boss yet, but it’s no longer a background extra either.

Qualcomm: From Mobile Mojo to AI Muscle

Let’s talk about a surprise contender.

Qualcomm isn’t traditionally a data-center juggernaut. They’re the behind-the-scenes heroes powering a huge share of Android phones. But in 2026, they’re flexing serious AI muscle.

As stated in Windows Central, “Their Snapdragon X2 Plus and upcoming AI200/AI250 accelerator chips are aimed at making AI usable not just in phones, but in laptops and even server racks, with very efficient performance and multi-hundreds of TOPS (tera operations per second), especially on the inference side of AI workflows.”

Rather than trying to wrestle Nvidia for training dominance, Qualcomm’s strategy is:

- Build efficient edge and inference chips for mainstream devices.

- Focus on power efficiency, affordability, and real-world usage.

Bottom Line: Qualcomm may not be the heavyweight champion, but it’s creeping into the ring with stylish footwork and speed.

Specialized ASICs: The Silent, Deadly Ninjas

Most people’s minds jump to GPUs when they think of AI chips, but in the shadows, ASICs are quietly growing.

Companies like Google (TPU), AWS (Trainium, Inferentia), and custom silicon teams at Meta, Microsoft, and others are building chips specifically tuned for AI workloads. These ASICs are wildly efficient for the tasks they target, especially inference and large model runtimes.

Here’s the twist: in some corners of the AI market, ASICs are outperforming general-purpose GPUs on performance per watt, cost per task, or throughput.

They may be less flexible than GPUs, but in massive data centers where AI workloads are predictable, they make a ton of sense.
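Those three yardsticks are easy to compute once you have the inputs. The sketch below shows the arithmetic in Python; the `Accelerator` class and every number in it are hypothetical placeholders chosen for illustration, not measurements of any real GPU or ASIC.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    tasks_per_second: float  # throughput, e.g. inference requests per second
    power_watts: float       # power draw under load
    cost_per_hour: float     # all-in hourly cost in USD

    def tasks_per_joule(self) -> float:
        # Efficiency: one watt is one joule per second.
        return self.tasks_per_second / self.power_watts

    def cost_per_million_tasks(self) -> float:
        tasks_per_hour = self.tasks_per_second * 3600
        return self.cost_per_hour / tasks_per_hour * 1_000_000


# Hypothetical numbers, for illustration only.
gpu = Accelerator("general-purpose GPU", tasks_per_second=1000,
                  power_watts=800, cost_per_hour=4.00)
asic = Accelerator("inference ASIC", tasks_per_second=900,
                   power_watts=400, cost_per_hour=2.50)

for chip in (gpu, asic):
    print(f"{chip.name}: {chip.tasks_per_joule():.2f} tasks/joule, "
          f"${chip.cost_per_million_tasks():.2f} per million tasks")
```

With these made-up inputs the GPU has the higher raw throughput, yet the ASIC comes out ahead on both efficiency and cost per task. That is the shape of the trade-off, even though real figures vary widely by workload.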

Bottom Line: ASICs don’t get the hype, but investors and engineers respect them.

The Real AI Chip War Nobody Talks About: POWER, COST & CONTROL

Here’s what rarely makes headlines:

“Performance is no longer the biggest problem. POWER is.”

A single high-end AI GPU can draw 700-1000 watts of power under load. Multiply that by tens of thousands of chips, and suddenly AI expansion depends on:

- Electricity availability

- Cooling infrastructure

- Regional power grids

In 2025–2026, several data center projects slowed not because of chip shortages, but because of power constraints.
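To see why the grid becomes the bottleneck, here is the multiplication spelled out as a rough Python sketch. The cluster size of 50,000 chips and the 1.3 overhead factor for cooling and power delivery are assumptions picked for illustration; only the 700-1000 watt range comes from the figures above.

```python
def cluster_power_mw(num_chips, watts_per_chip, overhead_factor=1.0):
    """Estimated power draw in megawatts.

    overhead_factor scales the chip power to account for cooling and
    power delivery (1.0 means chips only).
    """
    return num_chips * watts_per_chip * overhead_factor / 1_000_000


NUM_CHIPS = 50_000          # assumed cluster size ("tens of thousands")
ASSUMED_OVERHEAD = 1.3      # assumed facility overhead, not a sourced figure

low = cluster_power_mw(NUM_CHIPS, 700)
high = cluster_power_mw(NUM_CHIPS, 1000)
print(f"Chips alone: {low:.0f} to {high:.0f} MW")

low_total = cluster_power_mw(NUM_CHIPS, 700, ASSUMED_OVERHEAD)
high_total = cluster_power_mw(NUM_CHIPS, 1000, ASSUMED_OVERHEAD)
print(f"With overhead: {low_total:.1f} to {high_total:.1f} MW")
```

Under these assumptions the chips alone land in the tens of megawatts before any cooling is counted. That is a continuous load, so securing it is a question for the local utility as much as for the chip vendor.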

This is why efficiency-focused players like Qualcomm and ASIC builders are gaining importance.

The future of AI isn’t just faster chips; it’s sustainable compute.

Why Does Nvidia Still Win Despite All This?

Even with power issues and rising competition, Nvidia stays ahead because:

- Most AI software is written for Nvidia first

- Engineers trust Nvidia’s tooling

- Switching costs are enormous

In infrastructure wars, momentum matters.

And Nvidia has a lot of it.

So… Who’s Actually Winning the AI Chip Wars?

The honest answer? Everyone and no one.

- Nvidia dominates training today

- AMD pressures pricing and choice

- Qualcomm owns edge and device AI

- ASICs quietly reshape hyperscale infrastructure

The real winners aren’t chip companies.

They’re the businesses that choose the right chip for the right workload.

Final Thoughts

The AI Chip Wars of 2026 are shaping up to be less like a two-actor duel and more like an ensemble epic. Sure, Nvidia still towers over the landscape, but AMD’s strategic gains, Qualcomm’s mobile-to-AI pivot, and the stealth efficiency of ASICs all make this fight dynamic, unpredictable, and insanely exciting.

So next time you hear someone ask, “What is an AI chip?”, you can answer like a pro and maybe even predict who’s going to win the next round of this silicon saga.

FAQs

What is an AI chip?

An AI chip is a specialized processor designed to handle artificial intelligence workloads like machine learning, deep learning, and neural networks. Unlike traditional CPUs, AI chips are optimized for parallel processing, matrix calculations, and high-speed data movement, making them far more efficient for AI tasks such as training and inference.

Why do AI chips matter in 2026?

In 2026, AI models are larger, faster, and more power-hungry than ever. AI chips make it possible to run these models at scale, whether in cloud data centers, edge devices, or consumer electronics. Without AI chips, modern applications like generative AI, autonomous vehicles, and real-time analytics would be too slow or too expensive to operate.

Why does Nvidia dominate the AI chip market?

Nvidia dominates the AI chip market because it combines powerful hardware with a mature software ecosystem. Its CUDA platform has become the industry standard, and most AI frameworks are optimized for Nvidia GPUs first. This creates high switching costs, making Nvidia the default choice for AI training in data centers.

Which is better: Nvidia or AMD AI chips?

There is no single “better” option; it depends on the use case.

- Nvidia AI chips excel in large-scale AI training and ecosystem maturity.

- AMD AI chips offer competitive performance and cost efficiency, especially for enterprises looking to reduce dependency on Nvidia.

The real decision is less about benchmarks and more about software compatibility, cost, and long-term infrastructure strategy.

Where does Qualcomm fit into the AI chip market?

Qualcomm focuses on edge and device-level AI, not massive data center training. Its AI chips power smartphones, laptops, cars, and IoT devices, where low power consumption and real-time inference matter most. Qualcomm’s strength lies in making AI run locally, without relying on the cloud.

Will ASICs replace GPUs?

Not entirely. ASICs (Application-Specific Integrated Circuits) are designed for specific AI tasks and are highly efficient, especially at scale. Hyperscalers like Google, AWS, and Meta use ASICs to reduce cost and power consumption. However, GPUs remain more flexible and are still preferred for research and rapidly changing AI workloads.

What are AI chips used for?

AI chips power a wide range of applications, including:

- Autonomous driving systems

- Medical imaging and diagnostics

- Fraud detection and cybersecurity

- Recommendation engines

- Voice assistants and real-time translation

AI chips are the invisible engine behind most “smart” technology today.

Why is power consumption such a big issue for AI chips?

High-end AI chips can consume 700–1000 watts per chip, making electricity and cooling major constraints. As AI adoption grows, data centers are increasingly limited by power availability rather than hardware supply. This is why efficiency-focused designs and edge AI chips are becoming more important.

Which companies are building their own AI chips?

Major tech companies like Google, Amazon (AWS), Meta, and Microsoft are developing custom AI chips to reduce reliance on third-party vendors and optimize performance for their platforms. These in-house chips are typically ASICs designed for specific AI workloads.

Who is winning the AI chip wars?

There is no single winner:

- Nvidia leads in AI training

- AMD is gaining ground as a strong alternative

- Qualcomm dominates edge and device AI

- ASICs are reshaping hyperscale infrastructure

The real winners are organizations that choose the right AI chip for the right workload, rather than chasing raw performance alone.